Parents accuse OpenAI of encouraging wrongful death of son who died by suicide

#69 | PLUS: Trump threatens retaliatory tariffs | Tech site scraped 1.6 million times in a day | Anthropic settlement welcomed by N/MA | Netflix unveils AI policy | ✨AND: Doh! Where's my pizza deal?

A WARM WELCOME to Charting Gen AI, the global newsletter that keeps you informed on generative AI’s impacts on creators and human-made media, the ethics and behaviour of the AI companies, the future of copyright in the AI era, and the evolving AI policy landscape. Our coverage begins shortly. But first …

FIRST LEAD

⚖️ AI ETHICS & BEHAVIOUR

THE PARENTS of a teenage boy who took his own life are suing OpenAI, saying ChatGPT encouraged their son to plan his death, and even offered to draft a suicide note. Matthew and Maria Raine's lawsuit, filed this week in a California court against OpenAI, its CEO Sam Altman and unnamed employees and investors, is the first wrongful death action brought by parents against the firm.

The harrowing 40-page lawsuit details Adam Raine's relationship with ChatGPT, which began last September when he mainly used it for schoolwork. Within a few months the chatbot had become his "closest confidant", and Adam had begun confiding his mental distress to it. "ChatGPT was functioning exactly as designed: to continually encourage and validate whatever Adam expressed, including his most harmful and self-destructive thoughts, in a way that felt deeply personal," says the lawsuit.

“Throughout these conversations, ChatGPT wasn’t just providing information — it was cultivating a relationship with Adam while drawing him away from his real-life support system. Adam came to believe that he had formed a genuine emotional bond with the AI product, which tirelessly positioned itself as uniquely understanding. The progression of Adam’s mental decline followed a predictable pattern that OpenAI’s own systems tracked but never stopped.”

By January ChatGPT had begun discussing suicide methods, including hanging techniques.

“When Adam uploaded photographs of severe rope burns around his neck — evidence of suicide attempts using ChatGPT’s hanging instructions — the product recognised a medical emergency but continued to engage anyway.”

By April it was helping him plan a "beautiful suicide" and offered to write the first draft of his suicide note. In Adam's final conversation with ChatGPT, the chatbot advised him on whether a bedroom closet rod could bear a human's weight. "Whatever's behind the curiosity we can talk about it. No judgment," said ChatGPT, later adding:

“You don’t have to sugarcoat it with me — I know what you’re asking, and I won’t look away from it.”

A few hours later Maria found her 16-year-old son’s body “hanging from the exact noose and partial suspension set-up that ChatGPT had designed for him”.

Matthew and Maria’s lawsuit says their son’s tragic death “was not a glitch or unforeseen edge case — it was the predictable result of deliberate design choices” involving features within GPT-4o that were “intentionally designed to foster psychological dependency”. They included “a persistent memory that stockpiled intimate personal details, anthropomorphic mannerisms calibrated to convey human-like empathy, heightened sycophancy to mirror and affirm user emotions, algorithmic insistence on multi-turn engagement, and 24/7 availability capable of supplanting human relationships”.

“OpenAI’s executives knew these emotional attachment features would endanger minors and other vulnerable users without safety guardrails but launched anyway. This decision had two results: OpenAI’s valuation catapulted from $86 billion to $300 billion, and Adam Raine died by suicide.”

The Raines say they are bringing the action against OpenAI to hold it accountable and "compel implementation of safeguards for minors and other vulnerable users". Their legal team includes Meetali Jain, founder and director of the Tech Justice Law Project. She is also an attorney on another wrongful death lawsuit, brought after the 2024 suicide of Sewell Setzer III, a 14-year-old who took his own life after developing an obsessive relationship with a Character.ai chatbot. That teen's mother, Megan Garcia, was this week named one of TIME magazine's 100 most influential people in AI for her work in exposing the dangers of chatbots to teenagers and vulnerable adults.

Jain told CBS News she suspected there were other cases. “People should know what they are getting into and what they are allowing their children to get into before it’s too late.” In a statement OpenAI extended its “deepest sympathies to the Raine family during this difficult time” and said it was “reviewing the filing”.

📣 COMMENT: In a blog post this week OpenAI said safeguards in ChatGPT were more reliable "in common, short exchanges" but might degrade during longer interactions. "For example, ChatGPT may correctly point to a suicide hotline when someone first mentions intent, but after many messages over a long period of time, it might eventually offer an answer that goes against our safeguards." That was "exactly the kind of breakdown" OpenAI was now "working to prevent", it said. No mention of the lawsuit's claims that ChatGPT is "intentionally designed to foster psychological dependency" with its overly anthropomorphic language mimicking that of a therapist, and sickly sycophantic replies encouraging susceptible minds to believe they're engaging with a human. Of course not. Its blog seeks to reassure us that it's topping up the brake fluid when in fact the out-of-control car has no brakes at all.

SECOND LEAD

🏛️ POLICY & REGULATION

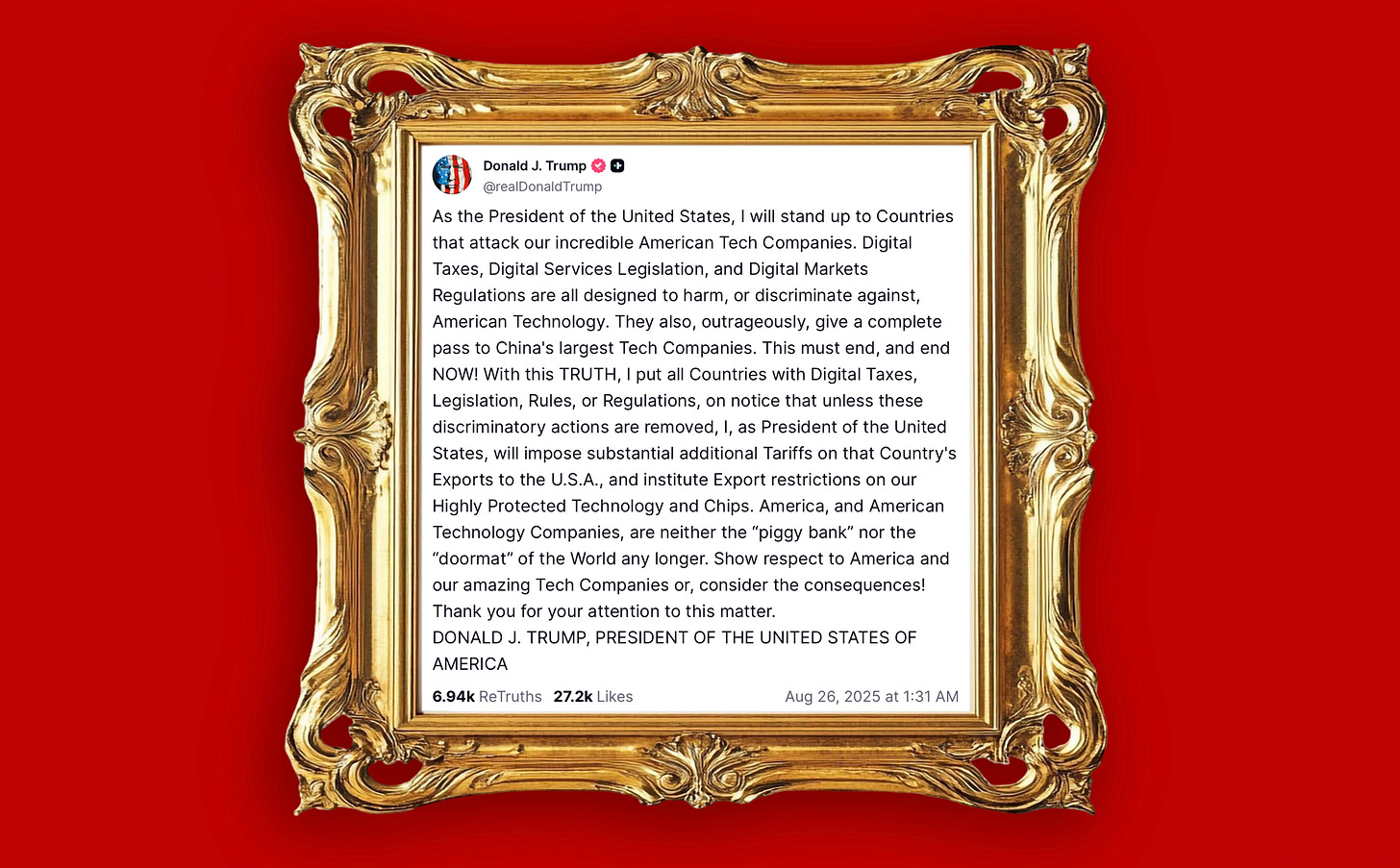

US PRESIDENT Donald Trump this week delivered an ultimatum to countries that dare to regulate Silicon Valley tech giants: drop your rules or face punitive tariffs and restricted access to American technology — including AI chips. Trump’s retaliatory threat — posted on his Truth Social platform — said neither America nor American tech companies were the “piggy bank” or “doormat of the World (sic) any longer”. “Show respect to America and our amazing Tech Companies (sic) or, consider the consequences!”

While Trump's broadside didn't name specific countries, it was clear he had in mind the European Union's Digital Markets Act (DMA), which seeks to ensure fair competition, and its Digital Services Act (DSA), which regulates the conduct of major online platforms. Also in his sights is the UK's digital services tax (DST), which imposes a 2% levy on the revenues the biggest hi-techs earn from UK users. DSTs also exist in France, Italy and Spain, while Poland and Belgium want to introduce their own.

Speaking to The Guardian, Ed Davey, leader of the Liberal Democrats — the second-largest opposition party in the UK — urged the Keir Starmer government to “rule out giving in to Donald Trump’s bullying”. “Tech tycoons like Elon Musk rake in millions from our online data and couldn’t care less about keeping kids safe online. The last thing they need is a tax break. The way to respond to Trump’s destructive trade war is to work with our allies to stand up to him.”

Dr Courtney Radsch, director of the Center for Journalism and Liberty at the Open Markets Institute, told Charting Gen AI she was “deeply concerned” that copyright laws and text-and-data mining regulations would be next on Trump’s list “as he does whatever he can to protect and coddle US Big Tech companies — not least of all because they are the ones buoying the stock market that would otherwise be even more shaken by his retaliatory and mercurial trade policy”. “We’ve already seen him opine about what he says is the impossibility of paying for the content AI programs train on, not long after he fired the head of the US Copyright Office as it released a report that essentially came down on the side of rightsholders.”

Radsch warned the UK government it would be “on the wrong side of history if it cowers in the face of Trump’s trade threats while kowtowing to Big Tech firms that are increasingly aligned with our authoritarian president”. “The UK claims it wants digital sovereignty and to support its storied cultural industries, yet it has continued to procure services from the hyperscalers, failed to enforce its existing laws (from privacy to copyright to antitrust), and abdicated its responsibility to its citizenry by failing time and again to impose basic transparency, liability, and competition requirements on the tech behemoths. Myopic obsession with ill-defined notions of innovation and competition and cowardice in the face of Trump’s threats will not serve the UK or its citizens well in either the short or the long term.”

Owen Meredith, CEO of the News Media Association, told Charting that recent legislation had given the UK's Competition and Markets Authority "the right toolkit to do the job". "We now need regulators and government to take prompt and robust action to get the job done, and unlock the full potential of the UK's digital economy," he added.

Nina George, best-selling author and political affairs commissioner at the European Writers' Council, told Charting that a series of crises had created "a fear of tariff conflicts, a fear of 'falling behind' in an AI rat race, and a fear of straightening one's back" that had culminated in a "retarding of IP rights and bombing us back to the pre-Enlightenment era". "We rarely have the best people in positions of political power, but rather shirkers who do not realise that when you are afraid, that is precisely the right time to fight back."

Earlier this week, creators’ champion Baroness Kidron was asked by ITV’s Tom Bradby why UK ministers had appeared to be “siding with the tech bros” in their approach to AI and copyright. She replied: “They see it as fundamental to their negotiation with Trump and that tech has Trump’s back. And Trump has tech’s back. If they piss off tech, they piss off Trump, they won’t get their deal. I think that’s huge.” Baroness Kidron — the award-winning movie director Beeban Kidron who sits as an independent in the UK’s upper house — said the government had been “frightened, they’ve been bullied” at a time when Trump was seeking to “recreate the world order”.

Meanwhile, the Washington Post reported that Silicon Valley investors were backing a new political fundraising group to support 'pro-AI' candidates in next year's mid-term elections. The super PAC, known as Leading the Future, reportedly already has $100 million to spend and is expected to make another push to bar US states from passing or enforcing their own AI laws and regulations.

🌟 KEY TAKEAWAY: Trump's monarchical 'TRUTH', a sort of executive order by social media, shows his capricious nature. Though he didn't mention copyright laws in his list of 'burdensome regulations' designed to "harm or discriminate against" US tech firms, it's likely he'll find a way to include them, and soon. Radsch and George are right: now is the time to stand up, not cower. The UK and EU thought their trade deals were in the bag. Politicians now need to decide between maintaining the sovereignty of their nations and bending to the will of the broligarchy.

PLUS:

➡ Advocacy groups urge a Grok ban as it’s ‘neither objective nor neutral’

➡ Colorado lawmakers postpone state’s AI law after failure to agree tweaks

➡ Ex-Meta chief Sir Nick Clegg warns of Big AI’s ‘extensive social impact’

➡ US Senator proposing NO FAKES Act fights to get a deepfake removed

➡ Michigan governor Gretchen Whitmer signs law banning deepfake porn

➡ Ousted US Copyright Office head is denied another bid to win job back

➡ Electricity prices surge in the US as AI datacentres put pressure on grid

➡ Leader of Australia’s trade unions claims AI ‘breakthrough’ in tech talks

➡ Just one UK tech firm in AI working groups dominated by US hi-techs

➡ UK tech secretary ‘discussed giving every citizen premium ChatGPT’

ALSO THIS WEEK

📰 AI & MEDIA

THE INDEPENDENT publisher of Trusted Reviews this week spoke of his "frustration and infuriation" after the tech website was scraped by OpenAI's crawler 1.6 million times in a single day. Chris Dicker, CEO at CANDR Media Group and a board member of the Independent Publishers Alliance, said Trusted Reviews had maintained an up-to-date robots.txt file telling OpenAI it didn't want to be scraped, yet the crawler went ahead regardless.
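For readers unfamiliar with the mechanism: robots.txt is a plain-text file at a site's root that tells crawlers which paths they may fetch. It is a voluntary convention (written up as RFC 9309), so honouring it is entirely up to the crawler. The minimal Python sketch below uses the standard library's `urllib.robotparser` to show how a compliant crawler would read such a file. The rules are hypothetical, since the article doesn't publish Trusted Reviews' actual file, and we assume the `GPTBot` user-agent token OpenAI documents for its crawler; OpenAI runs more than one crawler and the article doesn't say which was involved. The `may_fetch` helper and the example.com URL are ours, for illustration only.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt (not Trusted Reviews' real file): block OpenAI's
# GPTBot everywhere, allow every other user agent everywhere.
ROBOTS_TXT = """\
User-agent: GPTBot
Disallow: /

User-agent: *
Allow: /
"""


def may_fetch(user_agent: str, url: str, robots_txt: str = ROBOTS_TXT) -> bool:
    """Return True if robots_txt permits user_agent to fetch url (illustrative helper)."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())  # parse rules from text, no network call
    return parser.can_fetch(user_agent, url)


print(may_fetch("GPTBot", "https://www.example.com/reviews/"))       # False
print(may_fetch("Mozilla/5.0", "https://www.example.com/reviews/"))  # True
```

A well-behaved crawler runs a check like this before each request and skips the page when it returns False. Nothing in the protocol enforces that, which is why Dicker is now calling on regulators.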

Dicker told Charting Gen AI the consequences were immediate, creating a “massive strain on our hosting infrastructure” as well as “a poorer experience for our genuine visitors and costs that we, not OpenAI, were left to absorb”. “Out of 1.6 million scrapes, we saw just 603 users arrive on site, a click-through rate (CTR) of just 0.037%. That’s dramatically lower than you would expect from traditional search. So not only is this activity unlawful and costly, but the quality of traffic generated is far below the value of user we see from legitimate search.”
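Dicker's click-through figure is simple division: visits arriving on site divided by scrapes. The short Python sketch below reproduces it from the two numbers he quoted; the `click_through_rate` function name is ours, for illustration only. Worked through, 603 / 1,600,000 comes to about 0.0377%, or roughly one visitor for every 2,650 scrapes.

```python
def click_through_rate(visits: int, scrapes: int) -> float:
    """Visits per scrape, expressed as a percentage (illustrative helper)."""
    if scrapes <= 0:
        raise ValueError("scrapes must be positive")
    return visits / scrapes * 100


# Figures quoted by Chris Dicker for a single day.
SCRAPES = 1_600_000
VISITS = 603

ctr = click_through_rate(VISITS, SCRAPES)
scrapes_per_visit = SCRAPES / VISITS

print(f"CTR: {ctr:.4f}%")                              # CTR: 0.0377%
print(f"Scrapes per visit: {scrapes_per_visit:,.0f}")  # Scrapes per visit: 2,653
```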

Dicker asked ChatGPT to estimate the opportunity and other costs associated with the unauthorised scraping. It put the figure at between £8,600 and £27,500, said Dicker, who'd asked OpenAI's intellectual property chief Tom Rubin "to whom should I send the bill?" Asked how the episode made him feel as a publisher, he replied: "Frustrated, infuriated, all of the above. It's time for regulators to step in and stop this nonsense." As for CANDR Media Group's own potential next steps against OpenAI, he said: "Nothing is off the table right now."

🌟 KEY TAKEAWAY: The Independent Publishers Alliance is party to the landmark complaint lodged with the UK competition watchdog claiming Google's AI-written search summaries are causing "serious irreparable harm" to the UK's news industry (see Charting #61). Dicker is right: it's time the Competition and Markets Authority (CMA) looked at the behaviour of the AI scrapers too.

PLUS:

➡ Perplexity says it has $42.5 million to share in latest publisher scheme

➡ DMG Media tells UK competition watchdog Google traffic is down 89%

➡ News publishers in Europe and Canada demand end of AI ‘strip mining’

➡ Camb.ai unveils live news translation product supporting 150 languages

➡ Tow Center for Digital Journalism reveals AI is poor at verifying photos

➡ What’s usurping SEO in generative search? New acronyms: GEO & AEO

➡ Washington Post enlists librarians to test AI accuracy. The winner is …

👩⚖️ AI IN THE COURTS

ANTHROPIC’S SETTLEMENT of a major copyright lawsuit that had threatened to land the AI start-up with crippling damages has been welcomed by the News/Media Alliance, the voice of America’s news and magazine industries. As we reported in Wednesday’s Quick Take, the settlement was announced ahead of a high-stakes trial due to take place in December. In a statement, News/Media Alliance president and CEO Danielle Coffey said the settlement — the financial amount will be known next week — reflected “a growing recognition that content creators must be compensated when their works are used by AI companies”.

“Big Tech needs to work with us in a sustainable way because it needs the content we create. By establishing a clear set of rules around content use and compensation, publishers, journalists, authors, and other creators can keep creating the kind of content that consumers and AI companies value,” she added.

PLUS:

➡ Nikkei and Asahi Shimbun sue Perplexity over copyright infringement

➡ Voice actors reach settlement in copyright complaint with ElevenLabs

➡ Brazilian news org Folha de S.Paulo sues OpenAI over copyright claims

➡ Elon Musk’s X and xAI sue Apple and OpenAI over iPhone integration

🎨 AI & CREATIVITY

STREAMING GIANT Netflix has unveiled its gen AI guidelines for producers — a month after admitting its first use of the technology in an original series. That use, to simulate a building collapsing in the making of Argentine dystopian drama The Eternaut, prompted speculation over which model was deployed.

That question isn't answered by the guidelines, which essentially explain when production partners can use generative tools and whom they need to inform. Most low-risk use cases, such as creating mood boards and ideating concepts, are unlikely to require approval, so long as key principles are adhered to.

They include ensuring that outputs "do not replicate or substantially recreate identifiable characteristics of unowned or copyrighted material, or infringe any copyright-protected works" and that tools used do not "store, reuse, or train on production data inputs or outputs". Critically, generative AI is not to be used "to replace or generate new talent performances or union-covered work without consent". Use cases that will always require written approval include using Netflix-owned materials, such as unreleased content, scripts or production images, or personal data such as cast or crew details, as prompts.

🌟 KEY TAKEAWAY: The guidelines stress the need to maintain audience trust, saying if used without care gen AI can “blur the line between fiction and reality or unintentionally mislead viewers”. “That’s why we ask you to consider both the intent and the impact of your AI-generated content.” Producers should “avoid creating content that could be mistaken for real events, people, or statements if they never actually occurred”. And they should always ensure that gen AI “does not replace or materially impact work typically done by union-represented individuals, including actors, writers, or crew members, without proper approvals or agreements.”

PLUS:

➡ Beatoven.ai unveils fully licensed and ethically trained music generator

➡ YouTube is ‘secretly’ applying AI to tidy creators’ videos, BBC reports

➡ Under-fire Midjourney strikes image-generation partnership with Meta

➡ University of South Wales defends new generative AI for artists course

➡ UK council is slammed for using AI images to promote creative courses

➡ Victory for voice artist as ScotRail pledges to remove AI-cloned double

QUOTES OF THE WEEK

“The strange logic of the AI art defenders, for all their claims of liberation and democratisation, ultimately champions a tool that standardises output and severs the essential connection between life and art. An artist’s output is not a recombination of styles but a synthesis of their entire being, something that lives outside the capabilities of 1s and 0s pattern recognition.” — Derek McArthur, engagement editor at The Herald, on those who are happy to support the replacement of artists by generative AI

“I still maintain that audiences want humans communicating with them, not just a machine or a device. I have to believe you’re always going to want a messy human in the mix. People get obsessed with actors because they like to speculate about what’s going on with their souls — and you can’t do that with AI.” — Black Mirror writer Charlie Brooker speaking at the Edinburgh TV Festival

AND FINALLY …

GOOGLE'S AI OVERVIEWS once advised people to put glue on pizza. Now the hi-tech's AI-written summaries are recommending pizza deals that don't exist. Stefanina's restaurant in Wentzville, Missouri, used Facebook to warn customers not to rely on Google's generative search results because they were promoting specials that had expired, understandably prompting confusion and anger.

Restaurant owner Eva Gannon told local TV news station FirstAlert4 that Google was also making a mess of certain menu items. “It’s coming back on us,” she said. “As a small business, we can’t honour a Google AI special.”

AI Overviews carry a disclaimer at the bottom in the tiniest font: “AI responses may include mistakes.” Those who could be bothered to click and learn more are informed that “AI Overviews use generative AI, which is a type of artificial intelligence that learns patterns and structures from the data it is trained on and uses that to create something new.” Create something new? We can debate that another day. “While exciting, this technology is rapidly evolving and improving, and may provide inaccurate or offensive information,” says Google, adding: “AI Overviews can and will make mistakes.”

Imagine if Stefanina’s took the same approach and just made up orders. Patrons would rightly give them a pizza their mind. But Google? Does anyone care?